I first heard about Big Objects in a webinar. At first I didn’t really see a use case, and since the feature was in beta I didn’t care that much. Now that it has been released in Winter ’18, everything has changed.

My favourite Trailhead Badge is still the Electric Imp IoT one, and I thought it would be fun to store the temperature readings over a longer period of time. Since I run my integration in a Developer Edition org I only have 5 MB of storage available, which is not much given that I receive between 1 and 2 Platform Events per minute.

Most records in Salesforce use 2 KB each (details here), so with 5 MB I can store about 2500 records (less actually, since I have other data in the org).

Big Objects give you a limit of 1 000 000 records, so this should be enough for about 1 year’s worth of readings. Big Objects are meant for archiving and you can’t actually delete records from them, so I have no idea what will happen when I hit the limit, but I’ll write about it then.
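The numbers above can be checked with a few lines of anonymous Apex. This is just a back-of-the-envelope sketch: it assumes exactly 2 KB per record, a full 5 MB of free storage and the 1 to 2 events per minute mentioned above, and the variable names are my own.

```apex
// Rough storage estimate using the figures from the text (all assumptions).
Integer storageBytes = 5 * 1024 * 1024;   // 5 MB in a Developer Edition
Integer bytesPerRecord = 2 * 1024;        // roughly 2 KB per record
Integer maxRecords = storageBytes / bytesPerRecord;
System.debug('Custom object records that fit: ' + maxRecords);

Integer bigObjectLimit = 1000000;         // Big Object record limit
Integer eventsPerDayLow = 1 * 60 * 24;    // 1 event per minute
Integer eventsPerDayHigh = 2 * 60 * 24;   // 2 events per minute

// Integer division truncates, so these are whole days.
Integer daysAtLowRate = bigObjectLimit / eventsPerDayLow;
Integer daysAtHighRate = bigObjectLimit / eventsPerDayHigh;
System.debug('Days until the Big Object is full: ' + daysAtHighRate + ' to ' + daysAtLowRate);
```

So the regular storage fills up in a day or two, while the Big Object lasts somewhere between roughly one and two years.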

Anyways, there are some limitations on Big Objects:

- You can’t create them from the Web Interface

- You can’t run Triggers/Workflows/Processes on them

- You can’t create a Tab for them

The only way to visualise them is to build a Visualforce Page or a Lightning Component and that’s exactly what I’m going to do in this blog post.

Archiving the data

To start, I create the Big Object using the Metadata API. The definition looks very similar to a regular custom object, and I actually stole mine from a custom object called Fridge_Reading_Daily_History__c. I had to create that custom object because I can’t create Big Object records from a trigger, and I want to store every Platform Event.

Fridge_Reading_Daily_History__c has the same fields as my Platform Event (described here), and I create one Fridge_Reading_Daily_History__c record for every Platform Event received.

The Big Object definition looks like this:

    <CustomObject xmlns="http://soap.sforce.com/2006/04/metadata">
        <deploymentStatus>Deployed</deploymentStatus>
        <fields>
            <fullName>DeviceId__c</fullName>
            <externalId>false</externalId>
            <label>DeviceId</label>
            <length>16</length>
            <required>true</required>
            <type>Text</type>
            <unique>false</unique>
        </fields>
        <fields>
            <fullName>Door__c</fullName>
            <externalId>false</externalId>
            <label>Door</label>
            <length>9</length>
            <required>true</required>
            <type>Text</type>
            <unique>false</unique>
        </fields>
        <fields>
            <fullName>Humidity__c</fullName>
            <externalId>false</externalId>
            <label>Humidity</label>
            <precision>10</precision>
            <required>true</required>
            <scale>4</scale>
            <type>Number</type>
            <unique>false</unique>
        </fields>
        <fields>
            <fullName>Temperature__c</fullName>
            <externalId>false</externalId>
            <label>Temperature</label>
            <precision>10</precision>
            <required>true</required>
            <scale>5</scale>
            <type>Number</type>
            <unique>false</unique>
        </fields>
        <fields>
            <fullName>ts__c</fullName>
            <externalId>false</externalId>
            <label>ts</label>
            <required>true</required>
            <type>DateTime</type>
        </fields>
        <label>Fridge Reading History</label>
        <pluralLabel>Fridge Readings History</pluralLabel>
    </CustomObject>

Keep in mind that once the Big Object is created you can’t modify much of it, so to change it you need to delete it (this can be done from Setup) and deploy it again.

In my previous post I created a Trigger that updated my SmartFridge__c object for every Platform Event. This works fine, but with Winter ’18 you can create Processes that handle Platform Events, so I changed this. Basically, you create a Process that listens for Fridge_Reading__e events and finds the SmartFridge__c record with the same DeviceId__c.

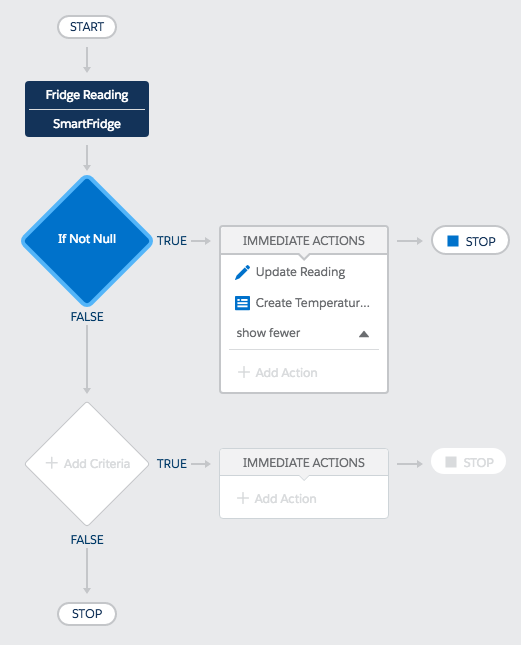

This is what my process looks like:

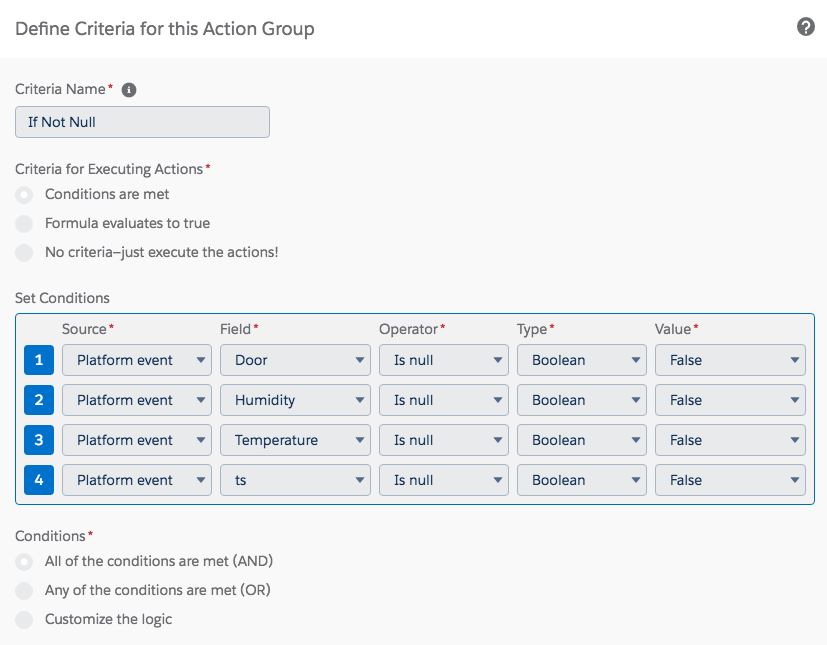

I added a criterion to check that no fields are null (they are required on my Fridge_Reading_Daily_History__c object).

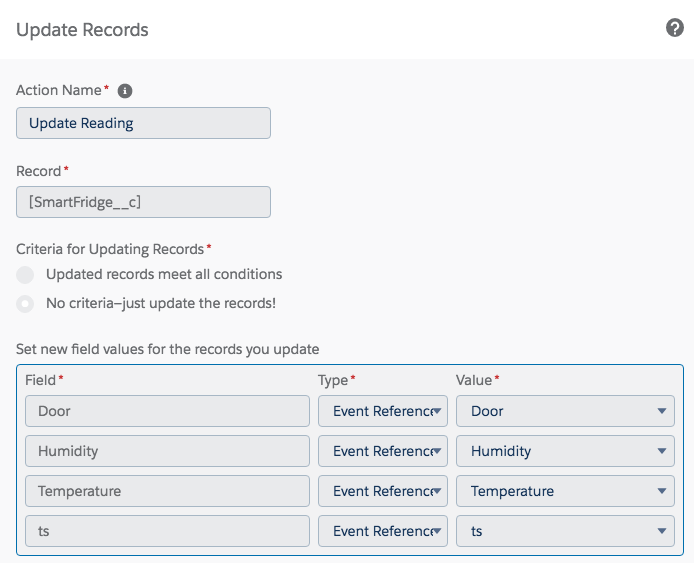

Then I update my SmartFridge__c object

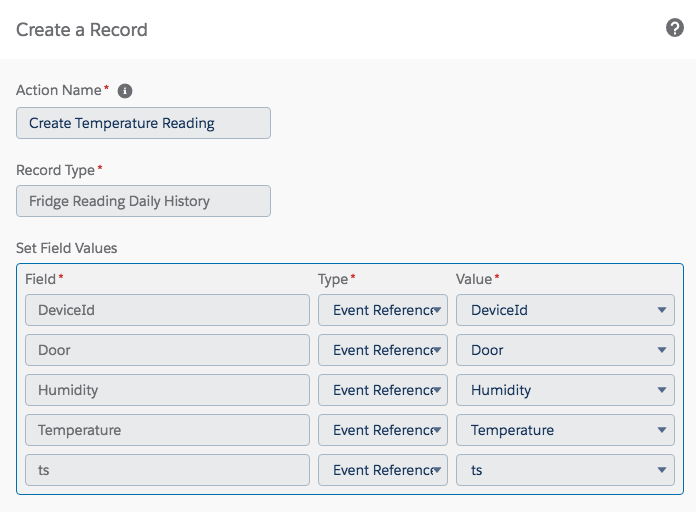

And create a new Fridge_Reading_Daily_History__c record.

So far so good. Now I have to make sure I archive my Fridge_Reading_Daily_History__c records before I run out of space.

After trying different ways to do this (such as Scheduled Apex), I realised that I can’t archive and delete the records in the same transaction (it’s in the documentation for Big Objects). I also didn’t want one scheduled job that archives to the Big Object every hour and then a second Scheduled Apex job that deletes the Fridge_Reading_Daily_History__c records that have been archived.

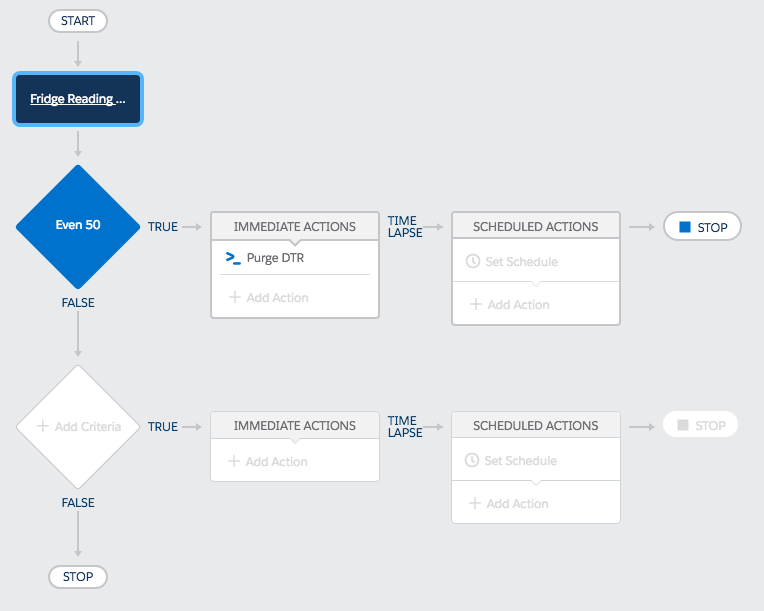

In the end I settled for a Process on Fridge_Reading_Daily_History__c that runs when a record is created.

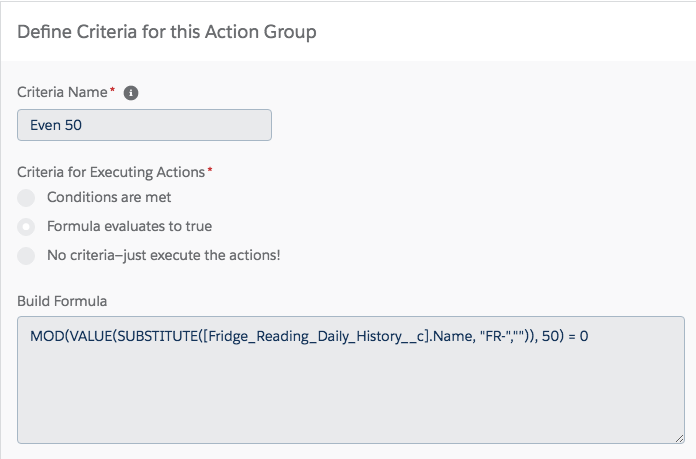

The process checks whether the Name of the record (an AutoNumber) is evenly divisible by 50.

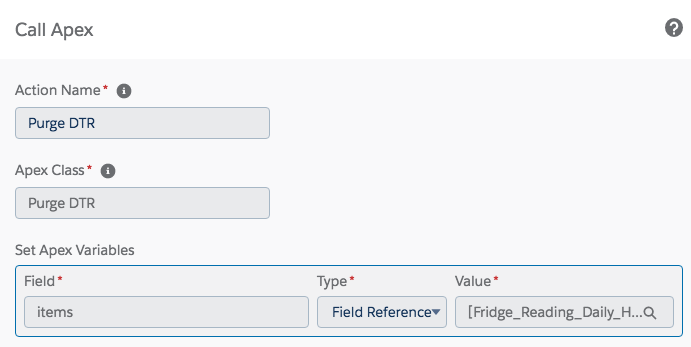
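For illustration, the divisible-by-50 check can also be expressed in Apex. This is only a sketch of the logic, not the formula the Process uses. The class and method names are hypothetical, and it assumes the AutoNumber Name might carry a non-numeric prefix such as FR-.

```apex
// Hypothetical helper: true when the numeric part of an AutoNumber Name
// is evenly divisible by the given divisor.
public class AutoNumberCheck {
    public static Boolean isDivisibleBy(String autoNumberName, Integer divisor) {
        if (String.isBlank(autoNumberName) || divisor == null || divisor == 0) {
            return false;
        }
        // Strip everything except digits, e.g. 'FR-0000050' becomes '0000050'.
        String digits = autoNumberName.replaceAll('[^0-9]', '');
        if (String.isBlank(digits)) {
            return false;
        }
        // Leading zeros are ignored when parsing.
        return Math.mod(Integer.valueOf(digits), divisor) == 0;
    }
}
```

With a divisor of 50, the purge runs roughly once for every 50 readings, which at 1 to 2 events per minute is about every 25 to 50 minutes.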

If so, it calls an invocable Apex method.

And the Apex code looks like this:

    /**
     * Created by Johan Karlsteen on 2017-10-08.
     */
    public class PurgeDailyFridgeReadings {

        // Called from the Process. The items parameter is not used, and its
        // element type (String) is an assumption.
        @InvocableMethod(label='Purge DTR' description='Purges Daily Temperature Readings')
        public static void purgeDailyTemperatureReadings(List<String> items) {
            archiveTempReadings();
            deleteRecords();
        }

        // Deletes daily readings older than the timestamp read from the Big Object.
        @future(callout = true)
        public static void deleteRecords() {
            Datetime lastReading = [SELECT DeviceId__c, Temperature__c, ts__c FROM Fridge_Reading_History__b LIMIT 1].ts__c;
            for (List<Fridge_Reading_Daily_History__c> readings :
                    [SELECT Id FROM Fridge_Reading_Daily_History__c WHERE ts__c < :lastReading]) {
                delete readings;
            }
        }

        // Copies every daily reading into the Big Object, one query batch at a time.
        @future(callout = true)
        public static void archiveTempReadings() {
            Datetime lastReading = [SELECT DeviceId__c, Temperature__c, ts__c FROM Fridge_Reading_History__b LIMIT 1].ts__c;
            for (List<Fridge_Reading_Daily_History__c> toArchive :
                    [SELECT Id, ts__c, DeviceId__c, Door__c, Temperature__c, Humidity__c
                     FROM Fridge_Reading_Daily_History__c]) {
                List<Fridge_Reading_History__b> updates = new List<Fridge_Reading_History__b>();
                for (Fridge_Reading_Daily_History__c event : toArchive) {
                    Fridge_Reading_History__b frh = new Fridge_Reading_History__b();
                    frh.DeviceId__c = event.DeviceId__c;
                    frh.Door__c = event.Door__c;
                    frh.Humidity__c = event.Humidity__c;
                    frh.Temperature__c = event.Temperature__c;
                    frh.ts__c = event.ts__c;
                    updates.add(frh);
                }
                // Big Object records are written with insertImmediate rather than insert.
                Database.insertImmediate(updates);
            }
        }
    }

This class calls the 2 future methods that archive and delete. Yes, they might not run in sequence, but it doesn’t really matter. You might also wonder why there’s a (callout = true) on the futures. I got a Callout Exception when trying to run it without that (the error was on the SELECT line), so I guess that the data is not stored inside Salesforce but rather in Heroku or similar, and Apex needs to make a callout to get it.

Big Objects are probably implemented like External Objects, which would make sense.

The Visualisation is done in the next post: Visualise Big Object data in a Lightning Component

Cheers, Johan